The power of inference-time compute, Part 1

How inference can revolutionize business AI economics

The AI world is shifting to inference. That’s the piece of the AI system that makes the real difference. In this three-part series, we will look at how the decisions you make about inference can affect your business metrics. This first article, Part 1, discusses the economics behind inference. In Part 2, we will share research results from running various inference-time compute algorithms against the CRMArena benchmark across several models, to highlight the impact of algorithmic choices relative to model choices. In Part 3, we will cover future directions and our next steps in this research, and provide instructions for trying out our ITC Studio and experimenting with inference algorithms.

The Shift from Bigger to Smarter

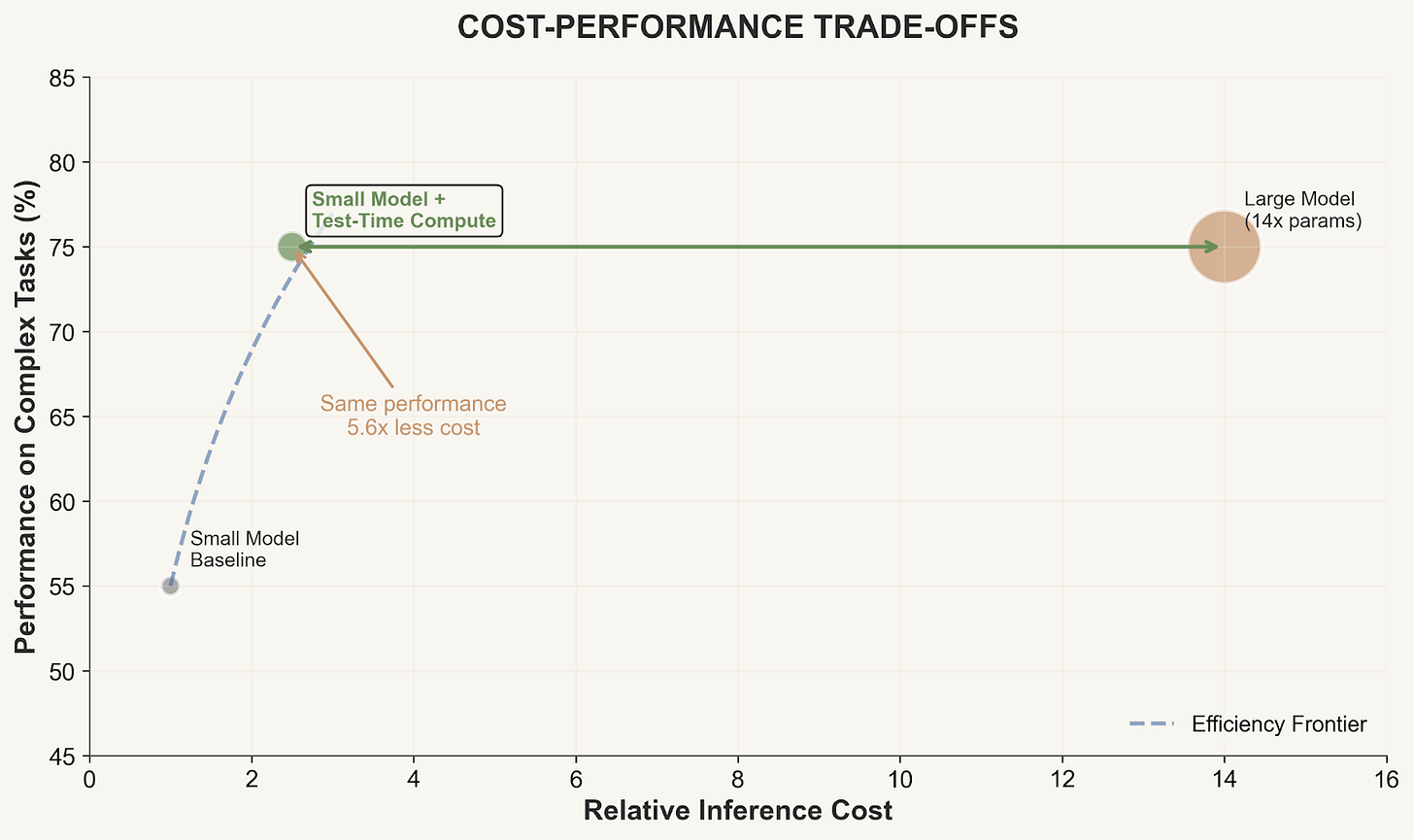

The AI industry is undergoing a fundamental transition. After years of investing billions in ever-larger models, enterprises can now unlock superior performance at lower cost by optimizing inference instead of model size. Recent breakthroughs demonstrate that inference-time compute (ITC) strategies enable smaller models to outperform systems many times their size. Combined with plummeting inference costs and surging enterprise AI spending, this creates a rare opportunity for businesses to achieve both performance and cost advantages, if they orchestrate effectively.

The Cost-Performance Paradox

Inference costs for GPT-3.5-level performance dropped 280× from late 2022 to late 2024. Hardware costs are declining ~30% annually, and energy efficiency is improving ~40% annually. Yet enterprise AI spending continues to accelerate, with average monthly costs projected to rise from $62,000 in 2024 to $85,000 in 2025, an increase of roughly 37%.

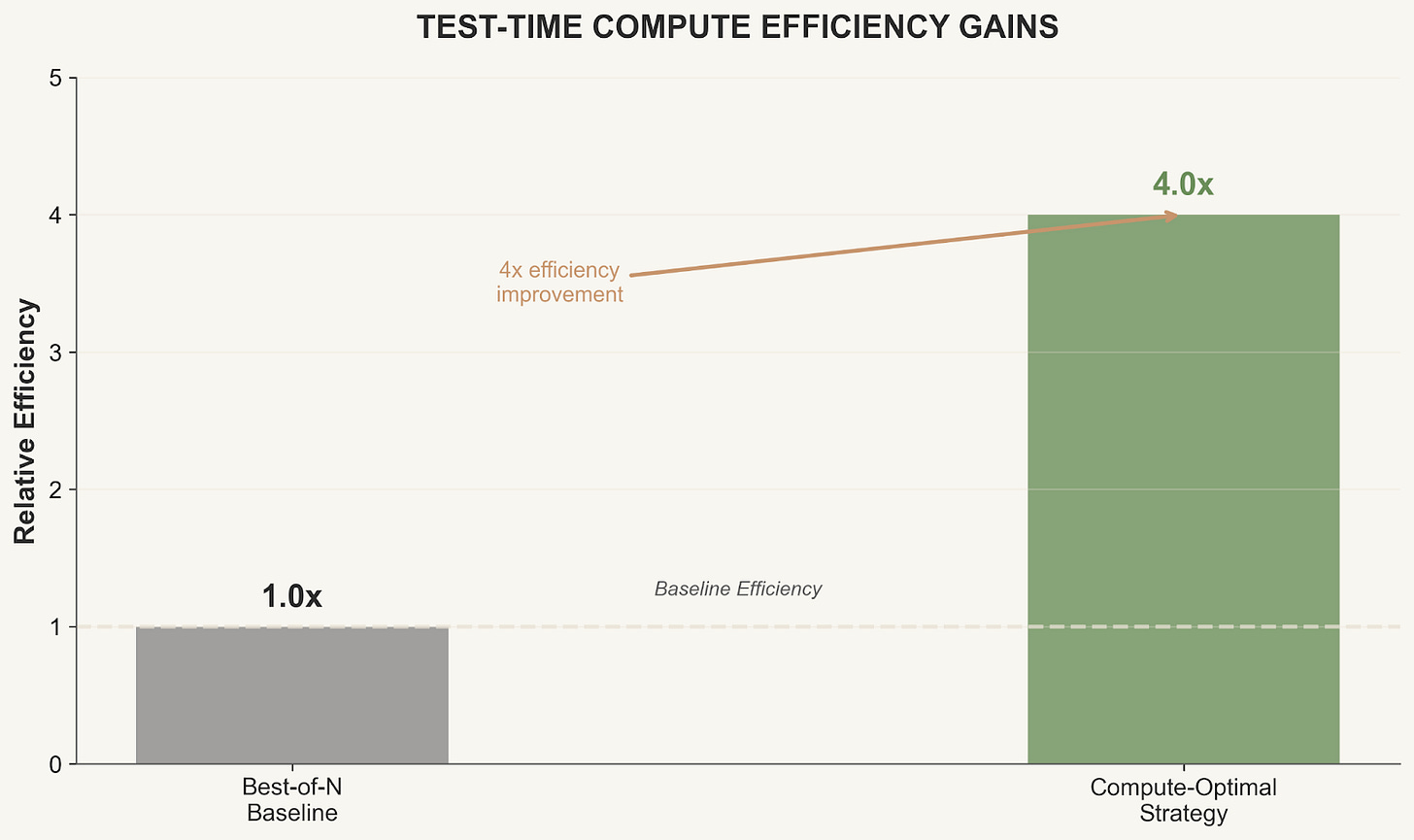

This paradox reveals the core opportunity: organizations are paying premium prices for computational brute force when intelligent inference strategies could deliver superior results at far lower cost. Stanford research shows that inference-time compute can be more effective than simply scaling model parameters, directly challenging the assumption that bigger models automatically produce better outcomes.

What Is Inference-Time Compute?

Inference-time compute isn’t an incremental improvement; it’s a shift in how AI systems process information. Rather than relying solely on a model’s pre-trained capabilities, ITC strategies (also called test-time compute, or TTC) apply additional computation during inference to improve reasoning quality. Iterative refinement during inference, as demonstrated by Google’s Deep Researcher with Test-Time Diffusion, shows that “thinking longer” at runtime can outperform training-time brute force.

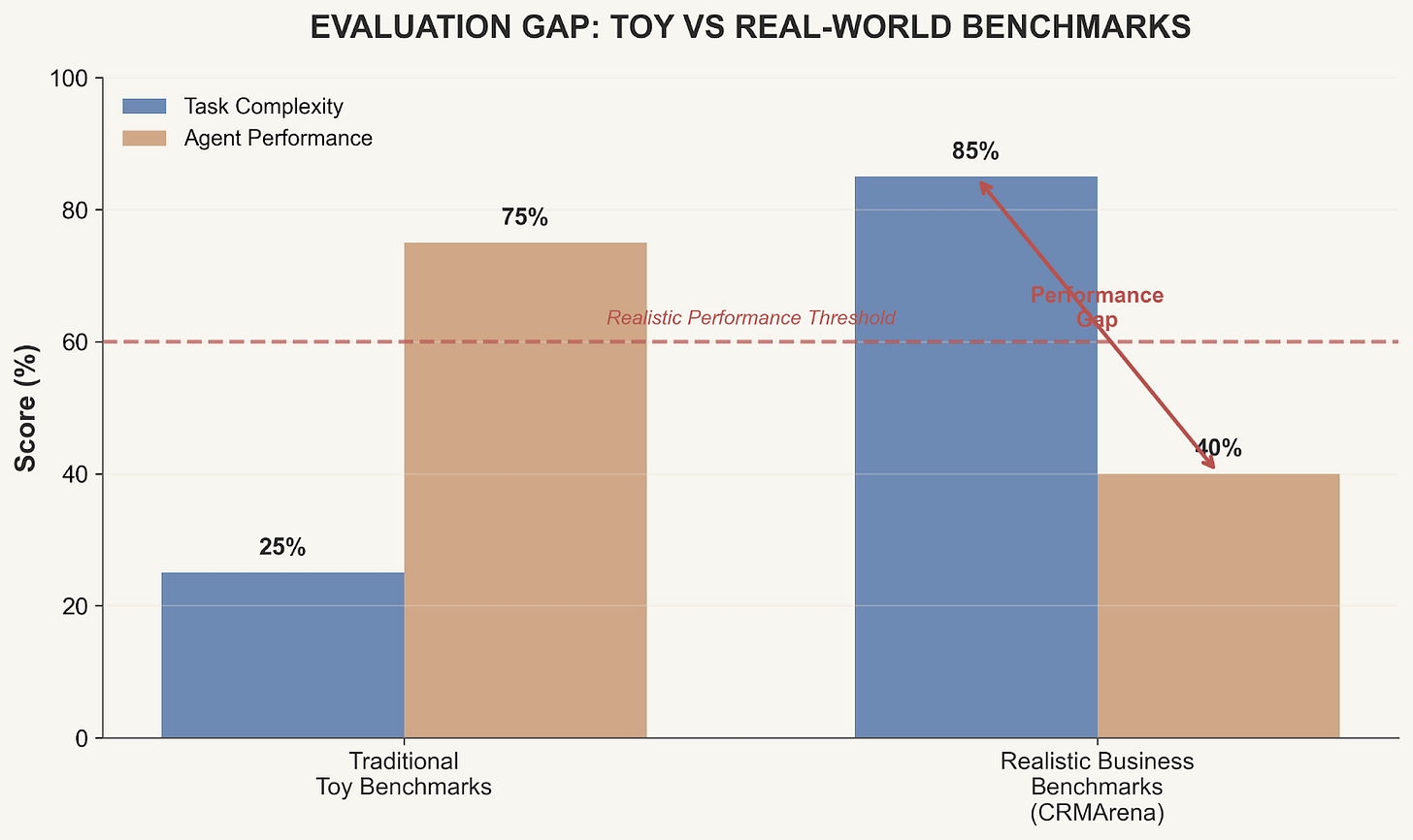

Bridging the Lab-to-Business Gap

Academic benchmarks emphasize technical metrics over human-centered or economic outcomes, creating a gap between academic success and business performance. Even frontier models that ace standardized tests can struggle with contextual decision-making in live business environments. Inference-time compute helps close this gap by making AI systems more adaptive and reliable under real-world constraints. One of our priorities at Neurometric is using real-world benchmarks that map to common work tasks.

Common ITC Algorithms

Intelligent inference-time compute strategies rely on several proven techniques, and our platform makes it easy to build, test, and deploy them. There are dozens of these algorithms, with more being discovered all the time, but below is a sample of the most common ones:

Beam search: Explores multiple answer paths through an LLM simultaneously, keeping the most promising branches.

Chain of thought: Forces models to generate step-by-step reasoning before the final answer.

Tree of thought: Branches multiple reasoning paths and prunes them based on intermediate results.

Best-of-n: Generates several candidate answers and selects the best using a scoring function, such as majority vote or another weighting criterion.

Verifier models: Uses secondary models to validate or critique primary model outputs. These models can be SLMs or LLMs trained for the task, or generally available advanced LLMs from frontier model labs.
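To make the list above more concrete, here is a minimal Python sketch of best-of-n selection. It is an illustration under stated assumptions, not Neurometric's implementation: `generate` stands in for any sampled model call, `scripted_model` is a hypothetical stub that replays fixed answers so the example runs without a model, and the optional `score` function plays the role of a verifier.

```python
import itertools
from collections import Counter
from typing import Callable, List, Optional, Sequence


def best_of_n(
    generate: Callable[[str], str],
    prompt: str,
    n: int = 5,
    score: Optional[Callable[[str, str], float]] = None,
) -> str:
    """Sample n candidate answers and return one of them.

    With no scorer, the most frequent answer wins (majority vote).
    With a scorer (for example, a verifier model), the top-scoring answer wins.
    """
    candidates: List[str] = [generate(prompt) for _ in range(n)]
    if score is None:
        # Majority vote: count identical answers and keep the most common one.
        return Counter(candidates).most_common(1)[0][0]
    # Verifier-style selection: keep the candidate with the highest score.
    return max(candidates, key=lambda answer: score(prompt, answer))


def scripted_model(answers: Sequence[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: replays a fixed list of answers."""
    replay = itertools.cycle(answers)
    return lambda prompt: next(replay)


# Five samples, three of which agree on "42".
model = scripted_model(["42", "41", "42", "24", "42"])
print(best_of_n(model, "What is 6 x 7?", n=5))  # prints 42
```

Passing a `score` function switches the same loop from majority vote to verifier-based selection, which is one way the best-of-n and verifier-model entries in the list can be combined.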

All of these methods improve performance by increasing reasoning depth at runtime. They allow the machine to “think” longer by applying these thinking algorithms to the given input. Neurometric’s core technology helps companies choose the algorithms that best match their problems, models, inputs, and benchmarks. It’s a system-level optimization tool, not a model-level optimization tool.

The Implementation Challenge

Performance depends on benchmarks and models: Different algorithms work better for different tasks and model sizes. For example, our research shows that chain of thought may not be effective on small models. Without consistent testing frameworks and evals, most enterprises struggle to determine which algorithms work best for each context and use case.
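As a rough illustration of what a consistent testing setup might look like, the sketch below runs several strategies over the same task set and reports accuracy and average token usage for each. Everything here is an assumption made for the example: the `Strategy` signature, the `evaluate` and `compare` helpers, and the two toy strategies are hypothetical, and a real harness would call actual models against a real benchmark.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A strategy takes a prompt and returns (answer, tokens_used).
Strategy = Callable[[str], Tuple[str, int]]
Task = Tuple[str, str]  # (prompt, expected_answer)


@dataclass
class Result:
    accuracy: float    # fraction of tasks answered correctly
    avg_tokens: float  # average tokens consumed per task


def evaluate(strategy: Strategy, tasks: List[Task]) -> Result:
    """Run one strategy over every task and aggregate the outcome."""
    correct = 0
    tokens = 0
    for prompt, expected in tasks:
        answer, used = strategy(prompt)
        correct += int(answer.strip() == expected)
        tokens += used
    return Result(correct / len(tasks), tokens / len(tasks))


def compare(strategies: Dict[str, Strategy], tasks: List[Task]) -> Dict[str, Result]:
    """Evaluate every named strategy on the same tasks for a fair comparison."""
    return {name: evaluate(s, tasks) for name, s in strategies.items()}


# Toy stand-ins (not real models): one cheap and sloppy, one costly and careful.
ANSWERS = {"2+2": "4", "3+3": "6"}

def direct(prompt: str) -> Tuple[str, int]:
    return ("4", 5)  # always answers "4" using few tokens

def step_by_step(prompt: str) -> Tuple[str, int]:
    return (ANSWERS[prompt], 40)  # correct, but uses more tokens

tasks = [("2+2", "4"), ("3+3", "6")]
for name, result in compare({"direct": direct, "step_by_step": step_by_step}, tasks).items():
    print(name, result)
```

Keying the dictionary by a model and algorithm pair (for example, `"small-model/chain-of-thought"`) would extend the same comparison across model sizes.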

Early adopters who implement a neutral, programmable control plane will achieve sustainable cost and performance advantages. These are the companies we are working with.

Our Evaluation Framework

We’re launching an evaluation framework designed specifically to test ITC methods across models and tasks. This will help enterprises understand which algorithms work best in which contexts, enabling them to balance accuracy, cost, and latency effectively.
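One simple way to think about balancing accuracy, cost, and latency is as a constrained choice: among the configurations that fit a cost and latency budget, pick the most accurate. The sketch below illustrates that idea only. It is not the framework described above; the `Measurement` fields and the `pick` helper are hypothetical, and the numbers are invented placeholders rather than benchmark results.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Measurement:
    name: str          # label for a model + algorithm configuration
    accuracy: float    # fraction correct on the chosen benchmark
    cost_usd: float    # average cost per request
    latency_s: float   # average latency per request, in seconds


def pick(
    measurements: List[Measurement],
    max_cost_usd: float,
    max_latency_s: float,
) -> Optional[Measurement]:
    """Return the most accurate configuration within both budgets, if any."""
    feasible = [
        m for m in measurements
        if m.cost_usd <= max_cost_usd and m.latency_s <= max_latency_s
    ]
    if not feasible:
        return None
    # Prefer higher accuracy; break ties in favor of the cheaper option.
    return max(feasible, key=lambda m: (m.accuracy, -m.cost_usd))


# Placeholder numbers for illustration only (not measured results).
candidates = [
    Measurement("small-model/direct", accuracy=0.60, cost_usd=0.001, latency_s=0.5),
    Measurement("small-model/best-of-5", accuracy=0.75, cost_usd=0.005, latency_s=1.5),
    Measurement("large-model/direct", accuracy=0.78, cost_usd=0.030, latency_s=2.0),
]

choice = pick(candidates, max_cost_usd=0.01, max_latency_s=2.0)
print(choice.name if choice else "no configuration fits the budget")
# prints small-model/best-of-5
```

Tightening or loosening the two budgets changes which configuration wins, which is why the same measurements can lead to different choices for different business constraints.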

If your company is implementing a multi-model AI system, we would love to help you think through the right ITC algorithms, token thinking budgets, and other factors to optimize that system for your business metrics.